A new study from researchers at the Allen Institute collected and analyzed the largest single dataset of neurons’ electrical activity to glean principles of how we perceive the visual world around us. The study, published Wednesday in the journal Nature, captures the hundreds of split-second electrical signals that fire when an animal is interpreting what it sees.

Your brain processes the world around you nearly instantaneously, but there are numerous lightning-fast steps between light hitting your retinas and the point at which you become aware of what’s in front of you. Humans have three dozen different brain areas responsible for understanding the visual world, and scientists still don’t know many of the details of how that process works.

“At a very high level, we want to understand why we need to have multiple visual areas in our brain in the first place,” said Josh Siegle, Ph.D., Assistant Investigator in the Allen Institute’s MindScope Program. “How are each of these areas specialized, and then how do they communicate with each other and synchronize their activity to effectively guide your interactions with the world?”

In the new study, Siegle and fellow MindScope researchers Xiaoxuan Jia, Ph.D., Senior Scientist; Shawn Olsen, Ph.D., Associate Investigator; and Christof Koch, Ph.D., Chief Scientist, led a team of researchers to pin down some of those details.

The Allen Institute team turned to the mouse, whose lima-bean-sized brain is still incredibly complicated. Mouse vision is not the same as ours—for one, they rely more heavily on other senses than we do—but neuroscientists believe they can still learn many general principles about sensory processing from studying these animals.

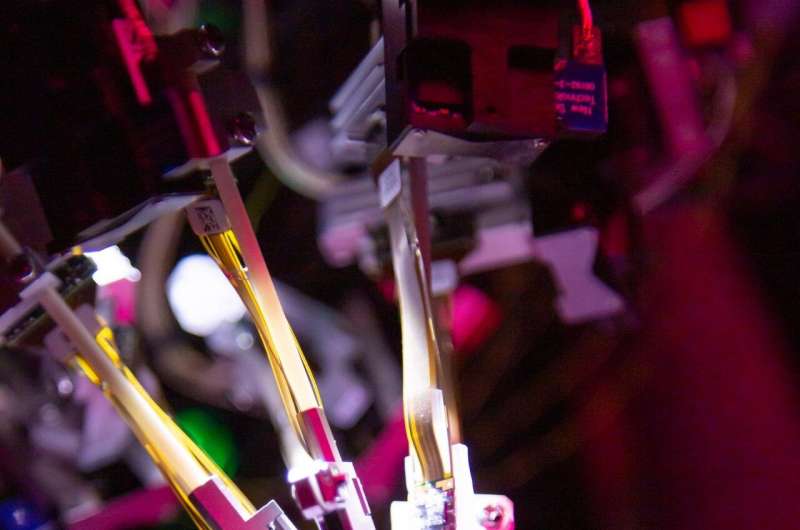

Using Neuropixels, high-resolution silicon probes thinner than a human hair that read out activity from hundreds of neurons at once, the team built a public dataset of electrical spikes from approximately 100,000 neurons in the mouse brain.

Not only is this dataset the largest collection of neurons’ electrical activity in the world, but each experiment in the database captured information from hundreds of brain cells in up to eight different visual regions of the brain at once. Reading electrical activity simultaneously across different areas of the brain allowed the scientists to trace visual signals in real time as they passed from the mouse’s eyes to higher regions of its brain.

The researchers found that visual information travels along a ‘hierarchy’ through the brain, in which lower areas represent simpler visual features like light and dark, while neurons at the top of the hierarchy capture more complex ideas, such as the shape of objects.

“Historically, people have studied one brain region at a time, but the brain doesn’t mediate behavior and cognition with just one area alone,” said Olsen. “We’re learning that the brain operates through the interaction of areas and signals sent from one area to another, but technical limitations have prevented us from studying this in depth in the past. We really needed the integrated view that this dataset provides to start to understand how that works.”

Tracing the brain’s traffic patterns

The Neuropixels study built on a previous Allen Institute study that mapped the mouse brain’s wiring diagram, the physical connections made by bundles of axons between many different areas of the brain. Using data from the Allen Mouse Brain Connectivity Atlas, that study traced thousands of connections within and between the thalamus and the cortex, the outermost shell of the mammalian brain, which is responsible for higher-level functions, including processing the visual world.

If the connectivity data is like the brain’s road map, the Neuropixels dataset is akin to tracking traffic patterns in the brain, Koch said. Even though signals in the brain move in a split second from one region to the next, the probes are sensitive enough to detect very slight time delays that let the scientists draw a real-time map of the route visual information takes in the brain. By comparing the Neuropixels data with the connectivity data, the scientists can get a clearer picture of how information moves along neural roadways.

“It’s as if we’re trying to map how cities are connected by watching the movement of cars on the road,” Koch said. “If we see a car in Seattle and then a few hours later we see that same car in Spokane and then much later we see the car in Minneapolis, then we have an idea that the connection from Seattle to Minneapolis has to pass through Spokane on the way.”

Like the roads in a country, the wiring map of a brain is not a simple structure. There are many different, parallel connections between two brain areas, even two neighboring areas. And like our system of interstate highways, arterial roads, and smaller roads, the brain has stronger and weaker connections. Just knowing the physical map isn’t enough to predict the route of visual information.

The researchers were able to map the signals onto a hierarchy using the time delays they observed in neural activity between different brain regions. They also used other measures to confirm the hierarchy, including the size of the visual field each neuron responds to. Cells lower in the hierarchy are tuned to smaller portions of the animal’s visual world, while higher-level neurons react to larger regions of visual space, presumably because those cells integrate more information about the entire scene in front of the animal.
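To make the logic concrete, here is a minimal Python sketch, not the researchers’ actual analysis, of how two independent measures can point to the same ordering. It assumes each area is summarized by the median response latency and median receptive-field size of its neurons; the area names and all numbers are hypothetical placeholders, not data from the study. If the area that responds first also has the smallest receptive fields, and so on up the chain, the two rankings agree.

```python
from statistics import median

# Hypothetical per-neuron response onset latencies (milliseconds).
# Placeholder values for illustration only; not data from the study.
latencies_ms = {
    "area_A": [31.0, 33.5, 29.8, 35.2],
    "area_B": [40.1, 38.7, 42.3, 41.0],
    "area_C": [52.4, 49.9, 55.0, 50.8],
}

# Hypothetical per-neuron receptive-field sizes (degrees of visual angle).
rf_size_deg = {
    "area_A": [12.0, 14.5, 11.8],
    "area_B": [19.2, 21.0, 18.4],
    "area_C": [27.5, 30.1, 25.9],
}

def rank_by(metric):
    """Sort area names from lowest to highest median value of `metric`."""
    return sorted(metric, key=lambda area: median(metric[area]))

# Earlier responders are treated as lower in the hierarchy.
latency_order = rank_by(latencies_ms)
# Smaller receptive fields are treated as lower in the hierarchy.
rf_order = rank_by(rf_size_deg)

print("Order by onset latency:", latency_order)
print("Order by receptive-field size:", rf_order)
print("Measures agree:", latency_order == rf_order)
```

With these toy values, both measures rank the areas A, then B, then C. Real recordings are far noisier, and a single median per area is a deliberate simplification.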

A critical process

The scientists recorded neural activity both in mice viewing different photographs and simple images and in mice trained to respond to a change in the image in front of them by licking a tiny waterspout. Information traveled through the brain along the same hierarchical path in both situations. When mice were trained to respond to a visual change, their visual neurons also altered their activity, and cells higher in the hierarchy showed even larger changes.

The scientists could even tell just from looking at the neural activity whether or not a particular animal had successfully detected a change in the image.

And when the researchers turned out all the lights (giving the animals no visual input), many of the same visual neurons still fired, albeit more slowly, but the order of information flow was lost. This could mean that the hierarchy is needed to process visual information, while the animals use the same cells for other purposes in a different circuit.

Although these kinds of detailed experiments are not possible in humans, studies looking at general brain activity have seen a similar sort of hierarchy—and changes in brain activity—in the parts of our brains responsible for sound and visual processing. Neuroscientists believe this type of hierarchical processing is used throughout the brain to understand many aspects of the world around us, not just what we see.
